Humans are social creatures. We live near each other and regularly trade, swap, and share things. Need a cup of sugar? Ask your neighbor. Car in the shop? Borrow your roommate’s.

But what if instead of asking for ingredients or a ride, your friend wanted to borrow something more personal — like your arms?

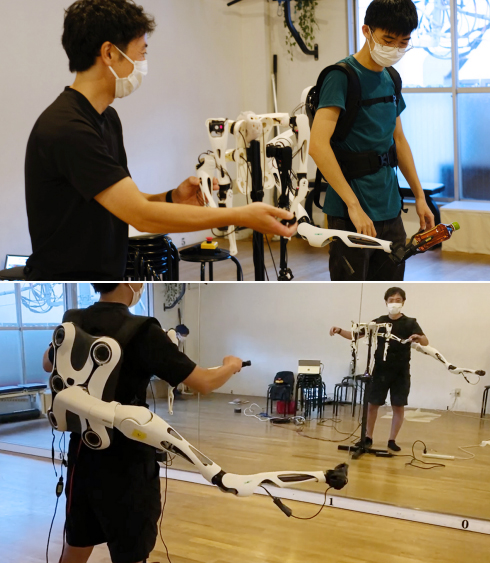

The study: To find out how people might feel about having and sharing robotic body parts, researchers at the University of Tokyo developed Jizai Arms, a backpack-like system capable of supporting up to six robotic arms at a time.

The researchers then held two days of role-playing sessions, wearing their new arms, swapping them with one another, and documenting their thoughts and feelings in diaries, sketches, photos, and more.


How they work: The robotic arms mirror the movements of a smaller controller. Lift the bottom right arm on the controller, and the bottom right arm strapped to your back will rise.

Unlike other examples of human augmentation that we’ve seen, the Jizai Arms aren’t going to give you extra abilities — you may be wearing two extra arms, but you’ll need to use your two real arms to control them or hand control over to someone else.

Still, the arms were easy to attach and detach, which was key to exploring how people might feel about gaining or losing the limbs.

Role playing: After collaborating on the design and construction of the Jizai Arms, the researchers spent a few hours wearing up to four of the robotic arms in a room with a mirrored wall, which allowed them to see how they looked in the system.

The following day, they used the arms in a room without mirrors, this time focusing on the physical sensations of wearing them.

During these role-playing sessions, the researchers used the controllers to move both the robotic arms on their own backs and those worn by others. They practiced attaching and detaching the arms, and played out scenarios in which they completed tasks using the arms and exchanged them upon request.

The researchers documented their thoughts and feelings throughout the experience, and included excerpts in their paper on the project (participants are referred to with labels such as R-1, D-1, and SA):

- “I didn’t feel much of anything after [the arm] was attached, just a little heavier,” said R-1, a human augmentation researcher. “But I felt more of a sense of loss after it was detached.”

- “I felt like I could move the arms with the controller more fully when I was manipulating someone else’s robotic arms,” said D-2, a product designer.

- “When D-1 manipulated one of my robotic arms and handed over the toy to [SA], even though I was distracted by my phone, I was surprised and found it convenient that my additional arms did the work for me!” reported R-1.

- “I felt like I had the upper hand when my opponent’s arms were reduced,” said D-1, another product designer.

The bottom line: While the Jizai Arms aren’t going to have any sort of “real world” impact, the University of Tokyo study highlights an important gap in human augmentation research: the need to study not only how we might feel using robotic body parts, but also how we might feel sharing them.

“For scientists investigating augmented humans, a study on the long-term use of the Jizai Arms may provide interesting insight into how the body image in the brain shifts through the experience of becoming a social digital cyborg,” the researchers write.

We’d love to hear from you! If you have a comment about this article or if you have a tip for a future Freethink story, please email us at [email protected].