An AI trained to assess heart scans outperformed human technicians, both in terms of accuracy and efficiency, in a first-of-its-kind trial.

“There’s a lot of hype and a lot of excitement around AI, but really this is the first piece of very concrete evidence that this is ready for clinical use,” said trial leader David Ouyang, a cardiologist at Cedars-Sinai Medical Center.

The challenge: Measuring the percentage of blood in the heart’s left ventricle that is pumped out with each beat, known as the “left ventricular ejection fraction” (LVEF), can help doctors assess heart function and determine treatment plans for cardiovascular disease.
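
For readers who want the arithmetic behind that percentage, here is a minimal sketch using the standard textbook definition of ejection fraction, which the article itself does not spell out. The function name and the example volumes are illustrative assumptions, not values from the trial or from Echonet.

```python
def ejection_fraction(edv_ml: float, esv_ml: float) -> float:
    """Return LVEF as a percentage.

    edv_ml: end-diastolic volume (ventricle full), in milliliters.
    esv_ml: end-systolic volume (ventricle after contraction), in milliliters.
    Standard definition: (EDV - ESV) / EDV * 100.
    """
    if edv_ml <= 0:
        raise ValueError("end-diastolic volume must be positive")
    if not 0 <= esv_ml <= edv_ml:
        raise ValueError("end-systolic volume must be between 0 and EDV")
    return (edv_ml - esv_ml) / edv_ml * 100.0


# Hypothetical volumes, chosen only to show the calculation.
print(round(ejection_fraction(120.0, 50.0), 1))  # prints 58.3
```

In practice the hard part is estimating the two volumes from the ultrasound video, which is the step the sonographer or the AI performs.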

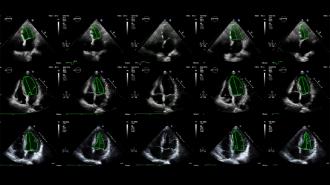

Traditionally, LVEF is determined using echocardiograms, which are ultrasound videos of the heart. A trained sonographer will assess LVEF based on the echocardiogram, and a cardiologist will then review the assessment and potentially adjust it.

This two-step process is necessary for accurate measurements, but it’s also tedious and time-consuming.

Echonet AI: In 2020, Stanford University researchers published a study detailing how they’d trained a deep learning algorithm, dubbed “Echonet,” to assess LVEF from echocardiogram videos.

For a recent blinded, randomized clinical trial at Cedars-Sinai Medical Center, they compared their AI’s LVEF assessments to ones made by sonographers with an average of 14 years of experience.

According to the researchers, this was the first ever randomized trial of an AI algorithm in cardiology — and Echonet performed even better than they’d hoped.

“There has been much excitement about the use of AI in medicine, but the technologies are rarely assessed in prospective clinical trials,” said Ouyang, who presented the results of the trial at ESC Congress 2022.

“This trial was powered to show non-inferiority of the AI compared to sonographer tracings,” he added, “and so we were pleasantly surprised when the results actually showed superiority with respect to the pre-specified outcomes.”

The trial: During the trial, nearly 3,500 adults underwent echocardiograms. Half were assessed by Echonet and half by trained sonographers. Cardiologists then reviewed the assessments — without knowing if they came from the AI or a person.

The cardiologists made significant adjustments (more than 5%) to 27% of the sonographers’ assessments, but just 17% of the AI’s. Echonet’s assessments were also closer to the cardiologists’ final decisions, with an average difference of 2.8 points compared to 3.8 points for the sonographers.
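
The two figures above can be reproduced from paired readings with a few lines of code. This is a rough sketch, assuming each case has an initial LVEF (from the AI or a sonographer) and a final LVEF (after cardiologist review), both in percentage points. The function name and the sample numbers are made up for illustration and are not trial data.

```python
from statistics import mean

# Threshold for a "significant adjustment", taken from the article's "more than 5%".
SIGNIFICANT_CHANGE_POINTS = 5.0


def review_summary(initial: list[float], final: list[float]) -> tuple[float, float]:
    """Return (share of cases changed by more than the threshold,
    mean absolute difference in LVEF points)."""
    if not initial or len(initial) != len(final):
        raise ValueError("need two non-empty lists of equal length")
    diffs = [abs(f - i) for i, f in zip(initial, final)]
    share_changed = sum(d > SIGNIFICANT_CHANGE_POINTS for d in diffs) / len(diffs)
    return share_changed, mean(diffs)


# Hypothetical readings for four patients (not from the trial).
initial_lvef = [55.0, 40.0, 62.0, 30.0]
final_lvef = [57.0, 47.0, 61.0, 30.0]
share, avg_diff = review_summary(initial_lvef, final_lvef)
print(f"{share:.0%} changed significantly, mean difference {avg_diff:.1f} points")
```

Running the same summary separately on the AI arm and the sonographer arm would yield a pair of numbers comparable to the 17% versus 27% and 2.8 versus 3.8 points reported here.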

The cardiologists also spent less time reviewing the AI’s LVEF assessments, and when asked to determine whether an assessment came from Echonet or a sonographer, they only guessed correctly about a third of the time — in 43% of cases, the doctors said they were too uncertain to even guess.

“[That] both speaks to the strong performance of the AI algorithm as well as the seamless integration into clinical software,” said Ouyang. “We believe these are all good signs for future trial research in the field.”

Team effort: Echonet might have produced LVEF assessments more in line with the cardiologists’ and saved the doctors time, but that doesn’t mean it’s going to put sonographers out of work.

“A common question that is asked is: is this thing going to mean that the sonographer or clinician is not useful? And the absolute answer is no,” said Ouyang.

“We think of this as if the sonographer is able to use a calculator instead of an abacus,” he continued. “It speeds [the process] up for them and it speeds it up for the cardiologist, and it also makes people more precise. But there’s no one being replaced in this workflow.”
