When you look out at the Milky Way, you’re viewing the local Universe in two different ways at once. Yes, you’re looking at the individual stars one at a time, but you’re also looking at the entire collection that they comprise when taken all together. This is a double-edged sword, however. It’s tempting to look for the oldest stars in the Milky Way, and to deduce that the galaxy is at least as old as the oldest stars found within it, but that’s merely one possibility, and not necessarily the correct one.

When you find a few old trees in a forest, it’s possible that the forest is at least as old as the oldest trees, but it’s also possible that those trees predated the rest of the forest, which was planted (or otherwise arose) at some later time. Similarly, it’s possible that the oldest individual Milky Way stars were originally formed elsewhere in the Universe and only fell into what would become our galaxy at some later time.

Fortunately, we don’t have to guess anymore. The astronomical field of galactic archaeology has improved so much since the advent of ESA’s Gaia mission that we can now definitively determine the age of the Milky Way. We now know it formed no later than 800 million years after the Big Bang, when the Universe was just 6% of its present age.

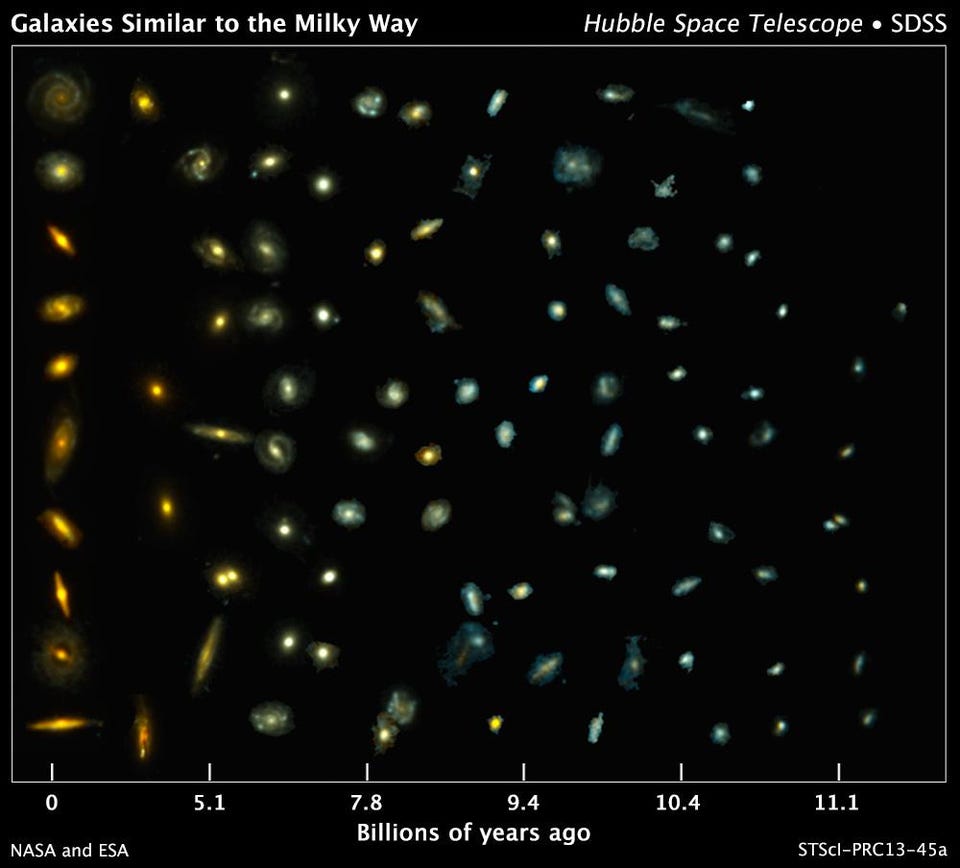

On a cosmic scale, it’s relatively easy to learn, in general, how the Universe grew up. With every observation that we take, we’re not only looking out across space, but back through time as well. As we look farther and farther away, we have to remember that it takes light a greater amount of time to journey to our eyes. Therefore, the more distant the object is that we’re observing, the farther back we’re seeing it in time.

Objects that are close to us, today, appear as they are 13.8 billion years after the Big Bang, but objects whose light has journeyed for hundreds of millions or even billions of years to reach our eyes appear as they were back when that light was emitted. As a result, by observing large numbers of galaxies from across cosmic time, we can learn how they’ve evolved over the Universe’s history.
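The link between how long light has traveled and the cosmic epoch we’re seeing is, at its simplest, a subtraction. The sketch below is a simplified illustration under stated assumptions: it takes the light-travel (“lookback”) time as a given input, ignoring how that time is actually inferred from redshift with a cosmological model, and it uses the 13.8-billion-year age quoted above. It also reproduces the “6% of its present age” figure for the Milky Way’s formation.

```python
# Present age of the Universe in billions of years (the value quoted in the text).
UNIVERSE_AGE_GYR = 13.8


def cosmic_age_at_emission(lookback_gyr: float) -> float:
    """Age of the Universe (Gyr) when light we receive today was emitted,
    given how long that light has been traveling (the lookback time).
    Simplification: the lookback time is assumed to be known directly."""
    if not 0.0 <= lookback_gyr <= UNIVERSE_AGE_GYR:
        raise ValueError("lookback time must lie between 0 and the Universe's age")
    return UNIVERSE_AGE_GYR - lookback_gyr


def fraction_of_present_age(age_gyr: float) -> float:
    """Express a cosmic age as a fraction of the Universe's present age."""
    return age_gyr / UNIVERSE_AGE_GYR


# Light that left its source 13.0 billion years ago was emitted roughly
# 0.8 Gyr after the Big Bang, when the Universe was about 6% of its present age.
age = cosmic_age_at_emission(13.0)
print(f"{age:.1f} Gyr after the Big Bang, {fraction_of_present_age(age):.1%} of today's age")
```

The same helper shows why the Solar System, at roughly 4.6 billion years old, is about a third the age of the Universe.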

On average, the farther away we look, the more likely we are to find galaxies that were:

- smaller,

- lower in mass,

- less clustered together,

- richer in gas,

- intrinsically bluer, rather than redder,

- with lower abundances of heavy elements,

- and with greater star-formation rates

than the ones we have today.

All of these properties are well established to change relatively smoothly over the past 11 billion years. However, as we go back to even earlier times, we find that one of those trends reverses: star formation. The star-formation rate, averaged over the Universe, peaked when the Universe was approximately 2.5–3.0 billion years old, meaning not only that it has declined ever since, but that up until that point, it was steadily increasing. Today, the Universe forms new stars at only 3% of its peak rate, but early on, the star-formation rate was lower as well, and it’s easy to comprehend why.

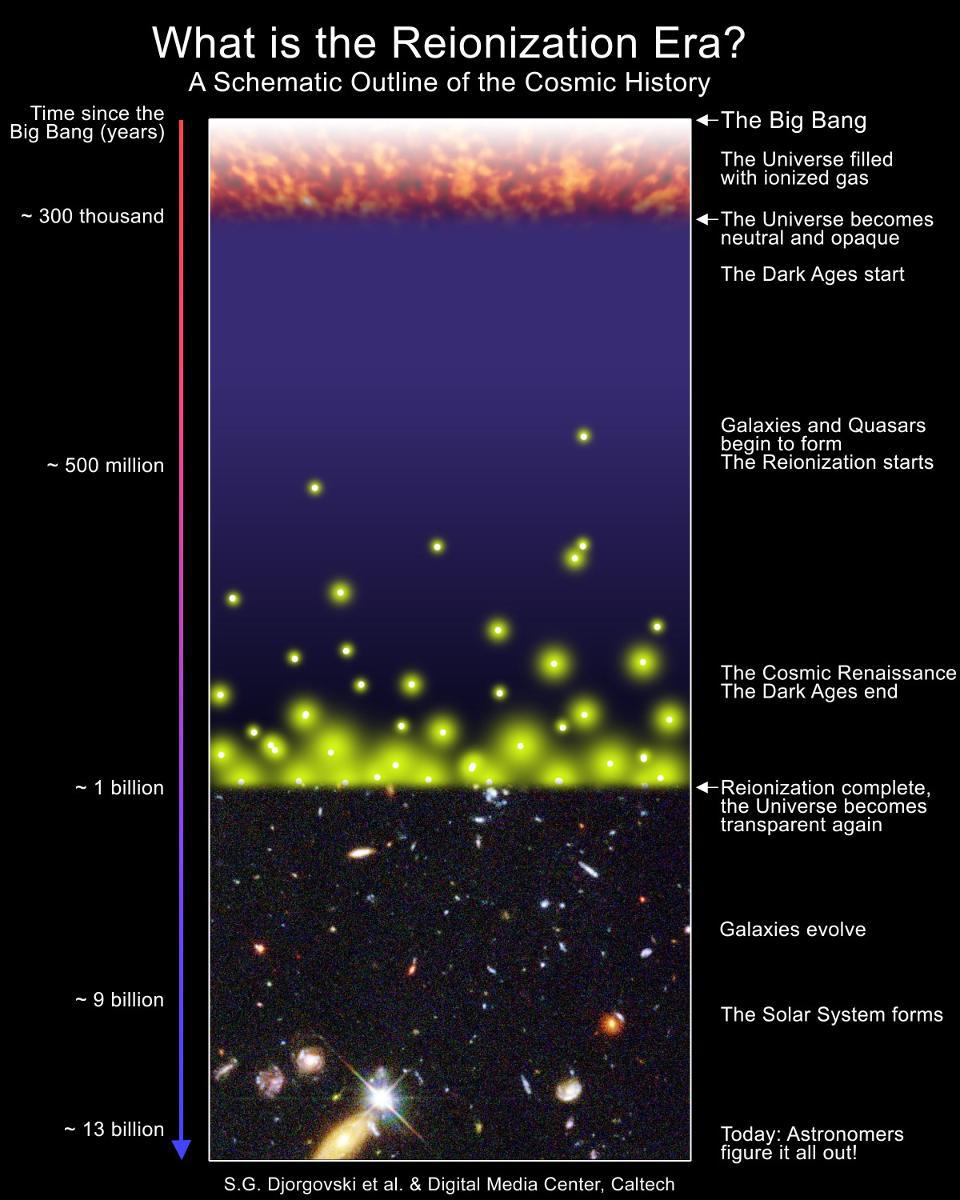

The Universe started off more uniform, as well as hotter and denser. As it expanded, rarefied, cooled, and gravitated, it began to grow the large-scale structures we see today. In the beginning, there were no stars or galaxies, only the seeds that would later grow into them: overdense regions of the Universe, with slightly more matter than the cosmic average. Although there were a few very rare regions that began forming stars just a few tens of millions of years after the Big Bang, on average it takes hundreds of millions of years for that to occur.

And yet, it’s so difficult to get to that very first generation of stars that we still haven’t discovered them. There are two main reasons for that:

- the Universe forms neutral atoms just 380,000 years after the Big Bang, and enough hot, young stars need to form to reionize all of those atoms before the starlight becomes visible,

- and the expansion of the Universe is so severe that, when we look back far enough, even light emitted in the ultraviolet gets stretched beyond the near-infrared capabilities of observatories like Hubble.
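The stretching described in the second point follows the standard redshift relation, in which every emitted wavelength is multiplied by a factor of (1 + z). The sketch below applies it to hydrogen’s ultraviolet Lyman-alpha line (121.567 nm). The ~1.7 μm cutoff standing in for Hubble’s near-infrared reach is an approximate value assumed purely for illustration, and the redshifts are arbitrary examples, not measurements of specific galaxies.

```python
# Rest-frame wavelength of hydrogen's ultraviolet Lyman-alpha line, in nanometers.
LYMAN_ALPHA_NM = 121.567

# ASSUMPTION: an approximate long-wavelength limit for Hubble's near-infrared
# imaging, used here only to illustrate the idea.
HUBBLE_RED_LIMIT_NM = 1700.0


def observed_wavelength(rest_nm: float, z: float) -> float:
    """Cosmological redshift stretches every wavelength by a factor (1 + z)."""
    return rest_nm * (1.0 + z)


def redshift_beyond_limit(rest_nm: float, limit_nm: float) -> float:
    """Redshift at which a rest-frame wavelength gets stretched to limit_nm."""
    return limit_nm / rest_nm - 1.0


# Example redshifts (illustrative, not tied to particular galaxies).
for z in (6, 10, 13):
    print(f"z = {z:2d}: Lyman-alpha observed at {observed_wavelength(LYMAN_ALPHA_NM, z):7.1f} nm")

z_cut = redshift_beyond_limit(LYMAN_ALPHA_NM, HUBBLE_RED_LIMIT_NM)
print(f"Lyman-alpha leaves the assumed window beyond z ~ {z_cut:.1f}")
```

Under these assumptions, ultraviolet light from the earliest galaxies lands at wavelengths longer than the assumed Hubble limit, which is the regime infrared observatories like JWST are built to reach.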

As a result, the farthest back we’ve ever seen, as far as stars and galaxies go, still puts us at ~400 million years after the Big Bang, and they’re still not completely pristine; we can tell they’ve formed stars previously.

Nevertheless, we can be confident that just 150 million years later, at about 550 million years after the Big Bang, enough stars had formed to fully reionize the Universe, making it transparent to starlight. The evidence is overwhelming: galaxies beyond that threshold are seen to have an intervening, absorptive “wall” of neutral gas in front of them, while galaxies closer to us than that point do not. While the James Webb Space Telescope should be remarkable for probing the pre-reionization Universe, we already have an excellent understanding of the Universe that existed from that point onward.

That’s the context in which we need to approach how our Milky Way formed: the context of the rest of the galaxies in the Universe. Yet it is neither the James Webb Space Telescope nor Hubble that allows us to reconstruct our own galaxy’s history, but rather a much more humble space telescope (technically, a dual telescope): the European Space Agency’s Gaia mission. Launched in 2013, Gaia was designed not to probe the distant Universe, but rather to measure the properties and three-dimensional positions of more stars in our galaxy, more precisely, than ever before. To date, it has measured the parallaxes, proper motions, and distances to more than one billion stars within the Milky Way, revealing the properties of the stellar contents of our own galaxy with unprecedented comprehensiveness.

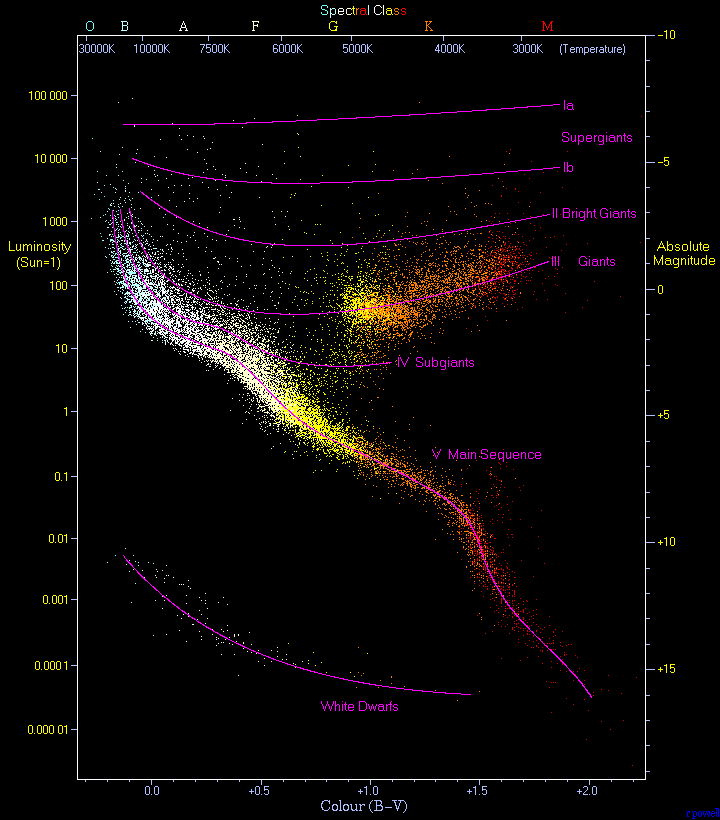

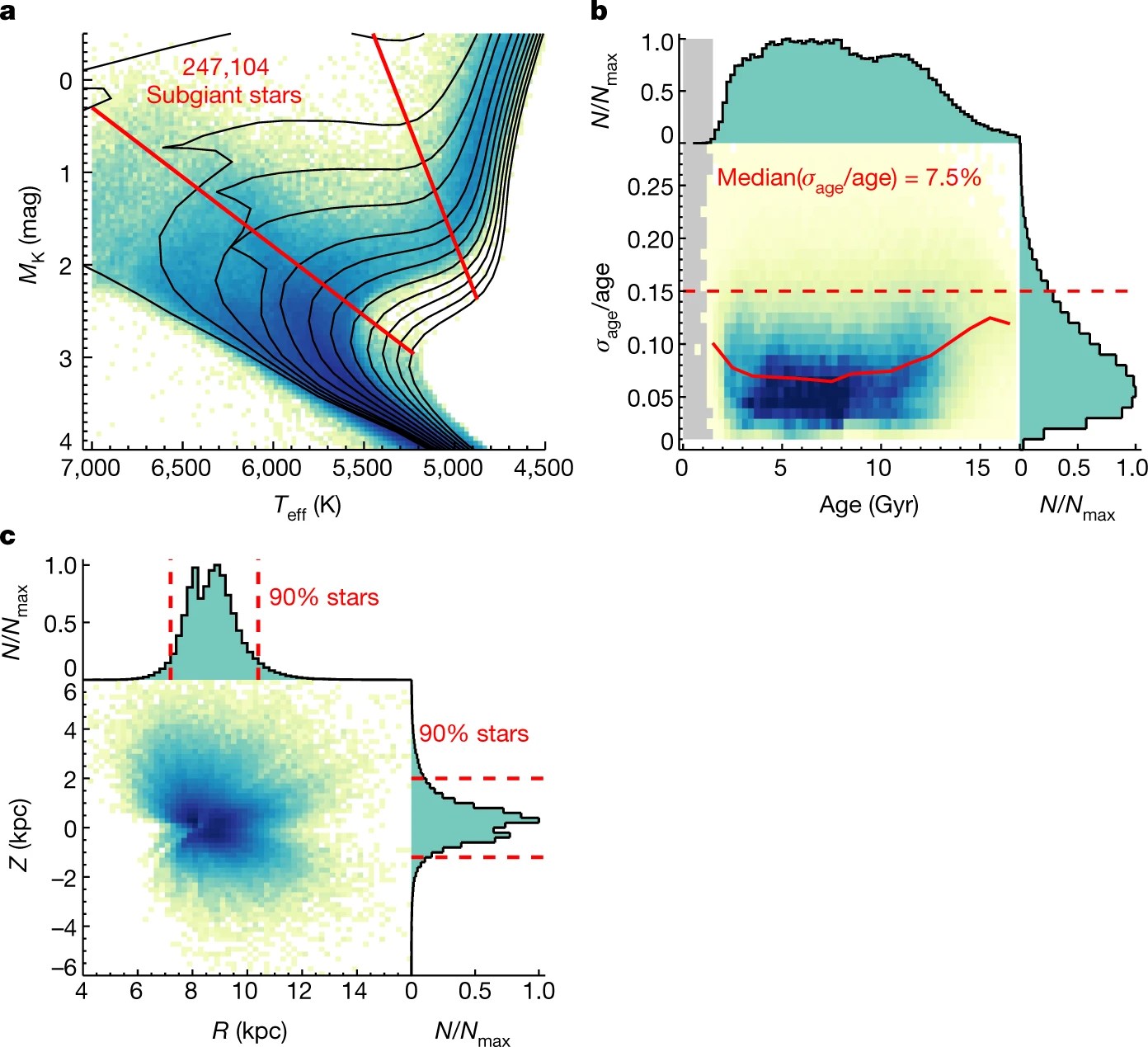

One of the most exciting things that Gaia has allowed us to do is to characterize the stars in our galaxy in a variety of ways, including when stars in different parts of the galaxy first formed. We do this by measuring both the color and brightness of the stars we see, and applying the rules of stellar evolution. When you map out a population of stars, you can plot “color” on the x-axis and “intrinsic brightness” on the y-axis, and if you do, you get a graph known as a color-magnitude (or, if you’re old school, Hertzsprung-Russell) diagram.
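Gaia’s parallaxes are what turn a star’s apparent brightness into the intrinsic brightness plotted on the y-axis. A minimal sketch, assuming simple parallax inversion (distance in parsecs = 1000 / parallax in milliarcseconds) and ignoring dust extinction, both simplifications that real Gaia analyses treat more carefully; the star names and values below are hypothetical.

```python
import math


def distance_pc(parallax_mas: float) -> float:
    """Distance in parsecs from a parallax in milliarcseconds (d = 1000 / p).
    Simplification: naive inversion, valid only for precise, positive parallaxes."""
    if parallax_mas <= 0:
        raise ValueError("parallax must be positive for this simple inversion")
    return 1000.0 / parallax_mas


def absolute_magnitude(apparent_mag: float, parallax_mas: float) -> float:
    """Intrinsic brightness via the distance modulus, M = m - 5 log10(d / 10 pc).
    ASSUMPTION: no correction for interstellar dust extinction."""
    return apparent_mag - 5.0 * math.log10(distance_pc(parallax_mas) / 10.0)


# Hypothetical stars: (name, color index, apparent magnitude, parallax in mas).
stars = [
    ("star_a", 0.65, 10.0, 10.0),
    ("star_b", 1.40, 12.5, 25.0),
    ("star_c", 0.10, 8.0, 2.0),
]

# Each star becomes one (color, absolute magnitude) point on the diagram.
for name, color, m, plx in stars:
    print(f"{name}: color = {color:.2f}, M = {absolute_magnitude(m, plx):.2f}")
```

Plotting many such (color, absolute magnitude) pairs, with brighter magnitudes toward the top, produces the color-magnitude diagram.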

This diagram is vital to the understanding of how stars age. When a new population of stars forms, they come in a wide variety of masses: from dim, low-mass, cool, and red to bright, high-mass, hot, and blue. This distribution forms a “snaking” line that goes from the lower-right of the graph, for the lowest mass stars, up to the upper-left of the graph, for the highest mass stars. When you have a brand new cluster of stars that’s only just formed, that snaking line describes all of your stars, completely, and is known as the main sequence.

But as stars age, something spectacular happens. You might have heard the expression, “the flame that burns twice as bright lives just half as long,” but for stars, the situation is even worse. A star that’s twice as massive as another lives only about one-eighth as long; to a rough approximation, a star’s lifetime on the main sequence is inversely proportional to the cube of its mass. As a result, the hottest, bluest stars burn through their fuel the fastest, and evolve off of the main sequence first. In fact, we can determine the age of any stellar population that formed all at once simply by looking at its color-magnitude diagram: the location of the “turn-off” from the main sequence tells us how long ago that population of stars formed.
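The turn-off dating logic can be sketched numerically. This sketch assumes the inverse-cube scaling quoted above, which is only a rough approximation, and a solar main-sequence lifetime of about 10 billion years; real age estimates, including those for Gaia’s subgiants, rely on detailed stellar-evolution models, so treat the numbers as illustrative only.

```python
# ASSUMPTIONS: the Sun's main-sequence lifetime is taken as ~10 billion years,
# and lifetime scales as mass**-3, the rough rule quoted in the text.
SUN_LIFETIME_GYR = 10.0
EXPONENT = 3.0


def main_sequence_lifetime_gyr(mass_solar: float) -> float:
    """Approximate main-sequence lifetime (Gyr) for a star of the given mass
    in solar masses. Evaluated at the turn-off mass, this is the population's age."""
    return SUN_LIFETIME_GYR * mass_solar ** -EXPONENT


def turnoff_mass(age_gyr: float) -> float:
    """Mass (solar masses) of the stars just now leaving the main sequence
    in a population that formed all at once, age_gyr ago."""
    return (SUN_LIFETIME_GYR / age_gyr) ** (1.0 / EXPONENT)


# Twice the mass gives one-eighth the lifetime under the cube rule.
print(main_sequence_lifetime_gyr(1.0) / main_sequence_lifetime_gyr(2.0))

# Older populations have lower-mass stars sitting at the turn-off.
for age in (1.0, 5.0, 13.0):
    print(f"{age:4.1f} Gyr old: turn-off near {turnoff_mass(age):.2f} solar masses")
```

Because the two functions are inverses of each other, measuring the turn-off mass of a population is equivalent to measuring its age, under these assumptions.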

So what happens, then, when a star “turns off” from the main sequence?

That’s synonymous, physically, with a star’s core running out of the hydrogen fuel that’s been burning, through nuclear fusion, into helium. That process powers all stars on the main sequence, and it does so at a slightly increasing but relatively constant rate over its lifetime. Inside the star, the radiation produced by these nuclear fusion reactions precisely balances the gravitational force that’s working to try and collapse the core of the star, and things remain in balance right up until the core starts running out of its hydrogen fuel.

At that point, a whole bunch of processes start to occur. When you’re running out of hydrogen, you have less material that’s capable of fusing together, so there’s suddenly less radiation being produced in the star’s core. As the radiation pressure drops, the balance between radiation and gravity that’s existed for so long starts to tip in gravity’s favor. As a result, the core begins to contract. Because the cores of stars are so large and massive, and because their size limits how quickly they can radiate energy away, the core heats up as it contracts.
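A standard way to see why a contracting core heats up rather than cools is the virial theorem for a self-gravitating ball of gas in equilibrium. This is an idealization (a real stellar core is not an isolated system), with $K$ the total thermal kinetic energy and $U$ the negative gravitational potential energy:

```latex
2K + U = 0
\quad\Longrightarrow\quad
E = K + U = \tfrac{1}{2}U = -K

\Delta E < 0 \;(\text{energy lost})
\quad\Longrightarrow\quad
\Delta U = 2\,\Delta E < 0 \;(\text{contraction}),
\qquad
\Delta K = -\Delta E > 0 \;(\text{heating})
```

Half of the gravitational energy released by the contraction heats the gas and the other half is radiated away, so losing energy leaves the core both smaller and hotter.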

What happens when the core of a star heats up? Paradoxically, the rate of nuclear fusion inside increases, as more of the atomic nuclei in the star’s core can get close enough together to have their quantum wavefunctions overlap and quantum tunnel into a more stable, heavier, more tightly bound nucleus, emitting energy in the process. Even as the core continues to exhaust its hydrogen, the star begins to brighten, transitioning into a relatively short-lived phase known as a subgiant: brighter than main-sequence stars of similar color, but with a core not yet hot enough to begin helium fusion, which ignites only after the star has become a red giant.

Of the prominent stars in the night sky, Procyon, a nearby star just 11.5 light-years away and the 8th brightest star in the sky, is the best-known subgiant. If you can identify a population of subgiants among a group of stars that formed all at once, you can be confident that you’re viewing the stars that are currently transitioning, or only very recently began transitioning, from main sequence stars into red giants. Therefore, if you can characterize these subgiants and learn what their initial masses were, you can determine how long ago this specific population of stars formed.

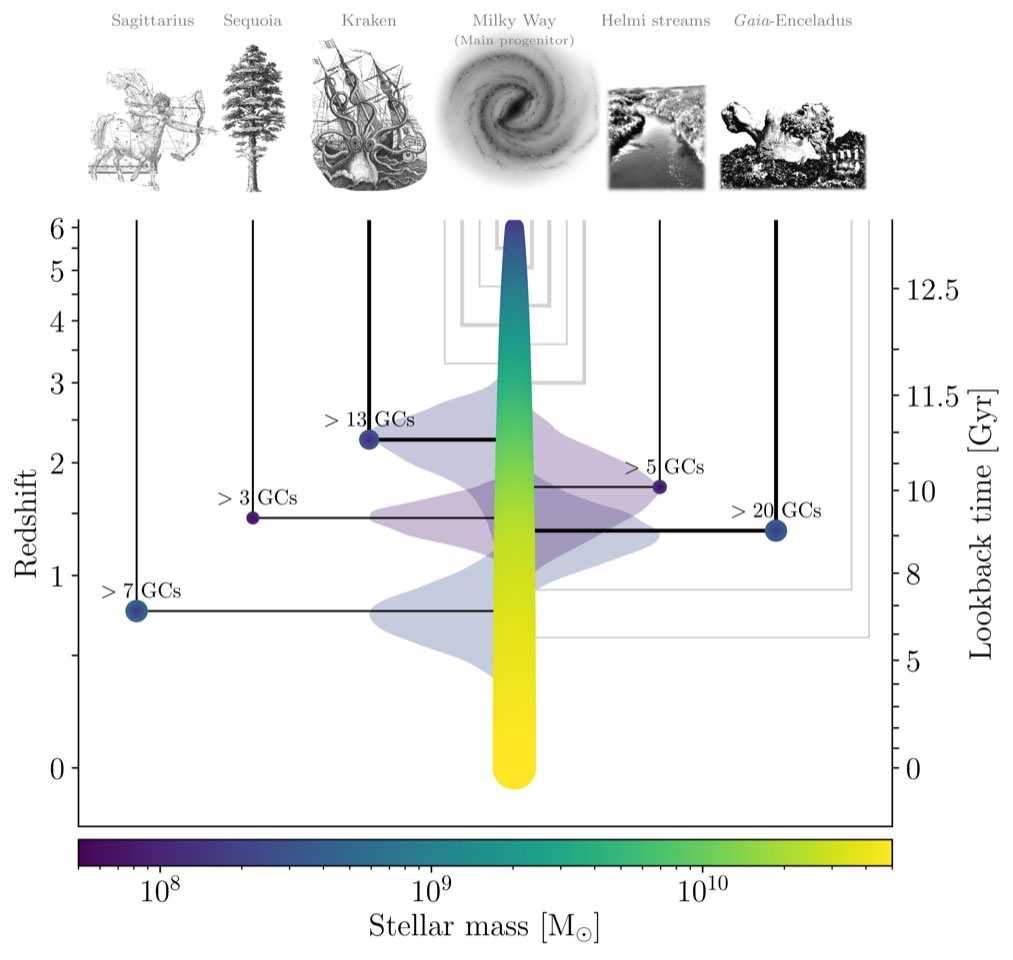

Examining the Milky Way’s globular clusters had previously revealed when five earlier minor mergers occurred, as galaxies that were devoured earlier in our cosmic history bring their globular clusters with them. But there are substantial uncertainties with that method.

For one, we only see the survivors, and some globular clusters underwent multiple episodes of star formation.

For another, there are only somewhere around 150 globular clusters in the entire Milky Way, so statistics are limited.

But thanks to the spectacular data from Gaia, astronomers had 247,104 mapped subgiant stars in our Milky Way, each with a precisely determined age, to examine.
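To get a feel for why sample size matters, a common back-of-the-envelope rule is that counting N independent objects carries a relative uncertainty of about 1/√N. This idealized Poisson estimate is assumed here only for illustration; it ignores the measurement errors and selection effects that dominate real analyses, and it is not the statistical method used in the study described below.

```python
import math


def fractional_poisson_error(n: int) -> float:
    """Idealized relative counting uncertainty, 1/sqrt(N), for N independent objects."""
    if n <= 0:
        raise ValueError("need at least one object")
    return 1.0 / math.sqrt(n)


# Sample sizes quoted in the text (the globular-cluster count is approximate).
samples = {"globular clusters": 150, "Gaia subgiants": 247_104}

for label, n in samples.items():
    print(f"{label:>17}: N = {n:>7,}  ->  ~{fractional_poisson_error(n):.2%} relative uncertainty")
```

Going from roughly 150 objects to roughly a quarter of a million shrinks this idealized uncertainty by about a factor of 40.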

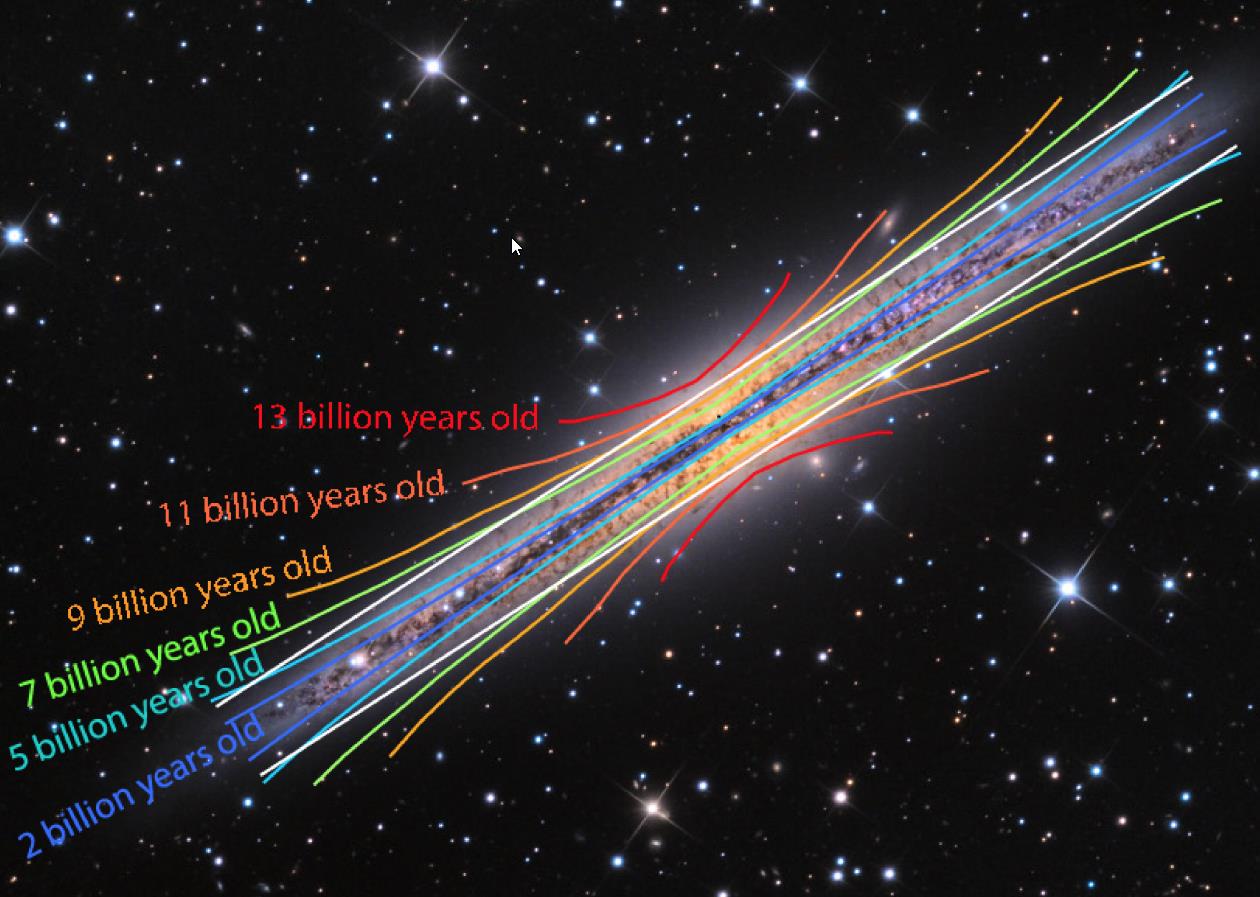

In a paper published in Nature in March of 2022, astronomers Maosheng Xiang and Hans-Walter Rix used the subgiant stars observed by Gaia to reconstruct the assembly history of the Milky Way. Their major findings are summarized below.

- The “thin disk” of the Milky Way, which is where most of the new stars have formed for the past ~6 billion years, is the younger part of the Milky Way.

- The galactic halo, whose inner part finished assembling about 11 billion years ago (coincident with a merger of a large satellite), is an older component of the galaxy.

- That intermediate time, from ~11 billion years ago until ~6 billion years ago, saw the star-forming gas remain well-mixed within the galaxy, while continuous star-formation and stellar death saw the fraction of heavy elements (i.e., elements other than hydrogen and helium) steadily increase by a factor of 10.

- But the “thick disk” of the galaxy, which is much more diffuse and greater in extent than the more recent thin disk, began forming no later than just 800 million years after the Big Bang, or at least 13 billion years ago.

This represents the first evidence that a substantial portion of the Milky Way, as it exists today, formed so early on in our cosmic history.

Yes, there are very likely stars in the Milky Way that are older than the Milky Way itself, but this is to be expected. The cosmic structures in the Universe, including large, modern galaxies like the Milky Way, form via a bottom-up scenario, where clouds of gas collapse to form star clusters first, then merge and accrete matter to become proto-galaxies, and then those proto-galaxies grow, attract one another, merge and/or accrete more matter, and grow into full-fledged galaxies. Even over the Milky Way’s long history, we can identify no merger events in which a galaxy larger than about a third of the Milky Way (as it was at the time) joined what would grow into our galaxy.

If our galaxy, today, is a massive forest, then it’s clear that the first seeds had already sprouted and grown by the time the Universe was a mere 800 million years old: just 6% of its current age. The Milky Way may turn out to be even older, and as our understanding of both the early Universe and our local neighborhood improves, we may push the knowledge of our home galaxy’s existence back even farther. They often say that nothing lasts forever, and it’s true. But compared to our Solar System, which is only a third the age of our Universe, our home galaxy has existed, and will continue to exist, for almost as long as the Universe itself.

This article was reprinted with permission of Big Think, where it was originally published.