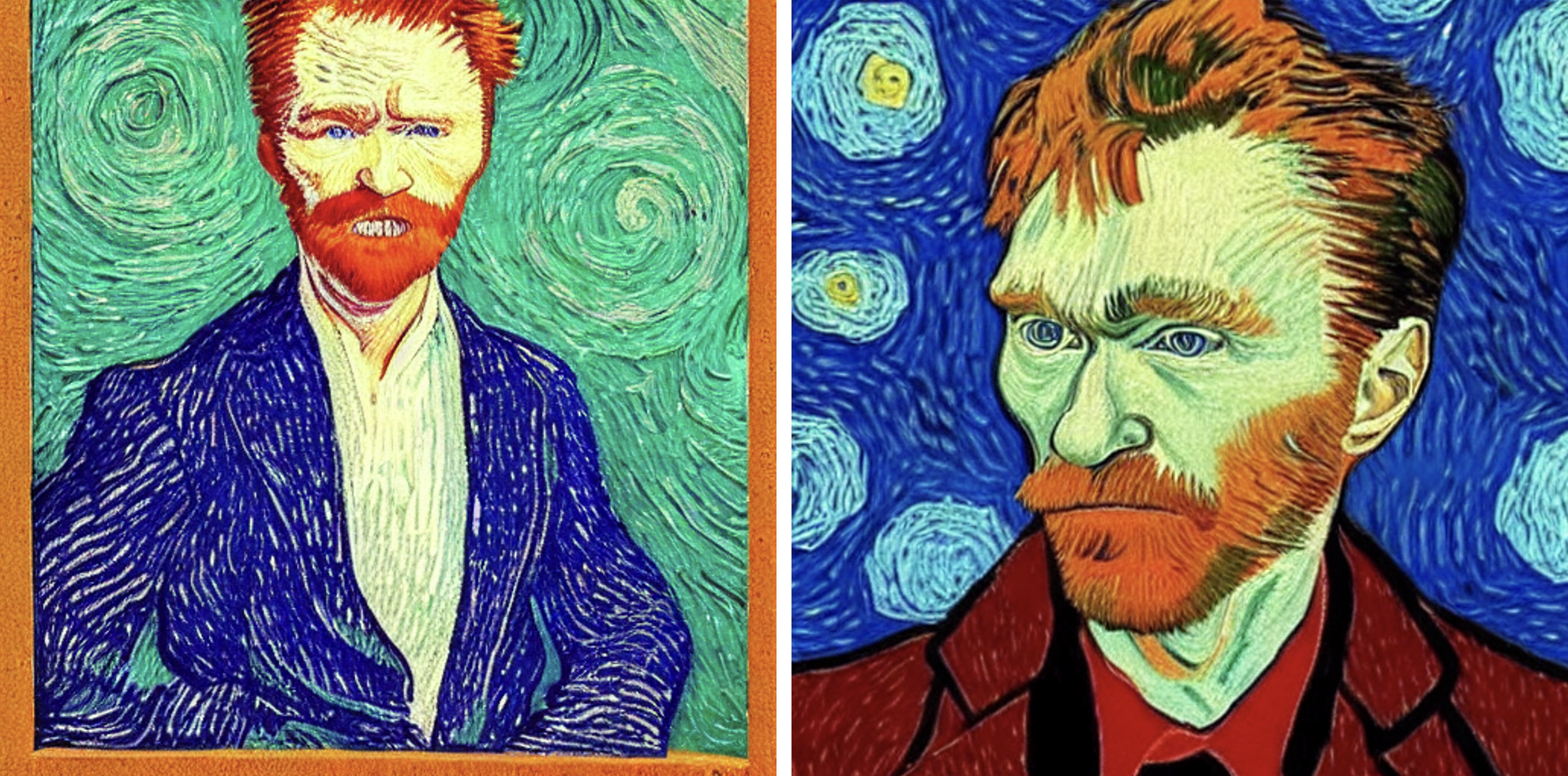

DALL-E is spooky good. Not so many years ago, it was easy to conclude that AI technologies would never generate anything of a quality approaching human artistic composition or writing. Now, the generative model programs that power DALL-E 2 and Google’s LaMDA chatbot produce images and words eerily like the work of a real person. DALL-E makes artistic or photorealistic images of a variety of objects and scenes.

How do these image-generating models work? Do they function like a person, and should we think of them as intelligent?

How diffusion models work

Generative Pre-trained Transformer 3 (GPT-3) is the bleeding edge of AI technology. The proprietary computer code was developed by the misleadingly named OpenAI, a Bay Area tech operation that began as a non-profit before turning for-profit and licensing GPT-3 to Microsoft. GPT-3 was built to produce words, but OpenAI adapted a version to produce DALL-E. Its sequel, DALL-E 2, generates images using a technique called diffusion modeling.

Diffusion models perform two sequential processes. They ruin images, then they try to rebuild them. Programmers give the model real images with meanings ascribed by humans: dog, oil painting, banana, sky, 1960s sofa, etc. The model diffuses — that is, moves — them through a long chain of sequential steps. In the ruining sequence, each step slightly alters the image handed to it by the previous step, adding random noise in the form of scattershot meaningless pixels, then handing it off to the next step. Repeated, over and over, this causes the original image to gradually fade into static and its meaning to disappear.
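The ruining sequence can be sketched in a few lines of Python. This is a minimal illustration, not DALL-E's actual code: the "image" is a toy row of 256 pixels, and the function names, the step count, and the noise strength `beta` are all assumptions chosen for the example. Each step shrinks the picture slightly and mixes in fresh random noise, and a correlation score tracks how much of the original survives.

```python
import math
import random

def add_noise_step(pixels, beta, rng):
    """One ruining step: slightly shrink every pixel and mix in fresh Gaussian noise."""
    keep = math.sqrt(1.0 - beta)   # fraction of the current image that survives
    mix = math.sqrt(beta)          # strength of the new noise
    return [keep * p + mix * rng.gauss(0.0, 1.0) for p in pixels]

def forward_chain(pixels, num_steps=200, beta=0.02, seed=0):
    """Return every image in the chain, from the original to near-pure static."""
    rng = random.Random(seed)
    chain = [list(pixels)]
    for _ in range(num_steps):
        chain.append(add_noise_step(chain[-1], beta, rng))
    return chain

def correlation(a, b):
    """Pearson correlation: a value near 1 means 'still looks like the original'."""
    n = len(a)
    mean_a = sum(a) / n
    mean_b = sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    return cov / math.sqrt(var_a * var_b)

# Toy "image" (hypothetical): a smooth gradient from -1 (dark) to +1 (bright).
image = [-1.0 + 2.0 * i / 255 for i in range(256)]
chain = forward_chain(image)
for step in (0, 1, 50, 200):
    print(step, round(correlation(image, chain[step]), 3))
```

Under these assumptions, the score should start at 1, stay high after a single step, and drift toward zero by the end of the chain, which is the fade into static described above.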

When this process is finished, the model runs it in reverse. Starting with the nearly meaningless noise, it pushes the image back through the series of sequential steps, this time attempting to reduce noise and bring back meaning. At each step, the model’s performance is judged by the probability that the less noisy image created at that step has the same meaning as the original, real image.

While fuzzing up the image is a mechanical process, returning it to clarity is a search for something like meaning. The model is gradually “trained” by adjusting billions of parameters (think of little dimmer switch knobs that adjust a light circuit from fully off to fully on) within neural networks in the code to “turn up” steps that improve the probability of meaningfulness of the image, and to “turn down” steps that do not. Performing this process over and over on many images, tweaking the model parameters each time, eventually tunes the model to take a meaningless image and evolve it through a series of steps into an image that looks like the original input image.
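To make the dimmer-knob picture concrete, here is a toy sketch with exactly one knob. It rests on assumptions that are not in the description above: the "image" is a single pixel that is either dark (-1) or bright (+1), the model guesses the added noise as `knob * noisy_pixel`, and it is scored by squared error on that guess, which is a common way to train diffusion models but not necessarily what DALL-E does. All names here are hypothetical.

```python
import math
import random

def make_example(rng, noise_level):
    """Return (noisy pixel, the noise that was added) for a random clean pixel."""
    keep = math.sqrt(1.0 - noise_level)
    mix = math.sqrt(noise_level)
    clean = rng.choice([-1.0, 1.0])   # toy dataset: a pixel is dark or bright
    noise = rng.gauss(0.0, 1.0)
    return keep * clean + mix * noise, noise

def train_knob(num_updates=20000, noise_level=0.5, learning_rate=0.002, seed=0):
    """Nudge one knob, example by example, so its noise guess gets less wrong."""
    rng = random.Random(seed)
    knob = 0.0
    for _ in range(num_updates):
        noisy, noise = make_example(rng, noise_level)
        error = knob * noisy - noise                  # guess minus the true noise
        knob -= learning_rate * 2.0 * error * noisy   # gradient step on error**2
    return knob

def average_squared_error(knob, noise_level=0.5, num_samples=5000, seed=1):
    """Judge a knob setting the only way we can: by how good its outputs are."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(num_samples):
        noisy, noise = make_example(rng, noise_level)
        total += (knob * noisy - noise) ** 2
    return total / num_samples

trained = train_knob()
print("untrained error:", round(average_squared_error(0.0), 3))
print("trained error:  ", round(average_squared_error(trained), 3))
```

In this setup the trained knob should score a clearly lower error than the untrained one. A real model repeats the same kind of nudge across billions of knobs at once, which is why the final settings are so hard to interpret.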

To produce images that have associated text meanings, words that describe the training images are taken through the noising and de-noising chains at the same time. In this way, the model is trained not only to produce an image with a high likelihood of meaning, but also one with a high likelihood of the same descriptive words being associated with it. The creators of DALL-E trained it on a giant swath of pictures, with associated meanings, culled from all over the web. DALL-E can produce images that correspond to such a weird range of input phrases because that’s what was on the internet.
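One way to picture how words steer the de-noising is to hand the caption to the model as an extra input. The sketch below is a deliberately tiny illustration under loud assumptions: there are only two captions ("dark" and "bright"), each image is a single pixel, and the model is a shared knob plus one offset per caption. None of this reflects DALL-E's real architecture, and every name is hypothetical.

```python
import math
import random

# Hypothetical two-caption dataset: each caption always describes the same pixel value.
CAPTION_TO_PIXEL = {"dark": -1.0, "bright": 1.0}

def train_conditional(num_updates=20000, noise_level=0.5, learning_rate=0.01, seed=0):
    """Learn to guess the added noise from the noisy pixel AND its caption."""
    rng = random.Random(seed)
    keep = math.sqrt(1.0 - noise_level)
    mix = math.sqrt(noise_level)
    scale = 0.0                                     # knob shared by all captions
    offsets = {caption: 0.0 for caption in CAPTION_TO_PIXEL}  # one knob per caption
    for _ in range(num_updates):
        caption = rng.choice(sorted(CAPTION_TO_PIXEL))
        clean = CAPTION_TO_PIXEL[caption]
        noise = rng.gauss(0.0, 1.0)
        noisy = keep * clean + mix * noise
        error = scale * noisy + offsets[caption] - noise
        scale -= learning_rate * 2.0 * error * noisy
        offsets[caption] -= learning_rate * 2.0 * error
    return scale, offsets, keep, mix

def generate(caption, model, seed=1):
    """Start from pure static and remove the noise the model predicts for this caption."""
    scale, offsets, keep, mix = model
    static = random.Random(seed).gauss(0.0, 1.0)
    predicted_noise = scale * static + offsets[caption]
    return (static - mix * predicted_noise) / keep

model = train_conditional()
print("bright ->", round(generate("bright", model), 2))
print("dark   ->", round(generate("dark", model), 2))
```

The same static yields a bright pixel or a dark one depending only on the caption, because the caption selects which learned offset is applied. The model has no idea what "bright" means; it has only learned which numbers go with which word.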

The inner workings of a diffusion model are complex. Despite the organic feel of its creations, the process is entirely mechanical, built upon a foundation of probability calculations. (This paper works through some of the equations. Warning: The math is hard.)

Essentially, the math is about breaking difficult operations down into separate, smaller, and simpler steps that are nearly as good but much faster for computers to work through. The mechanisms of the code are understandable, but the system of tweaked parameters that its neural networks pick up in the training process is complete gibberish. A set of parameters that produces good images is indistinguishable from a set that creates bad images — or nearly perfect images with some unknown but fatal flaw. Thus, we cannot predict how well, or even why, an AI like this works. We can only judge whether its outputs look good.

Are generative AI models intelligent?

It’s very hard to say, then, how much DALL-E is like a person. The best answer is probably not at all. Humans don’t learn or create in this way. We don’t take in sensory data of the world and then reduce it to random noise; we also don’t create new things by starting with total randomness and then de-noising it. Towering linguist Noam Chomsky pointed out that a generative model like GPT-3 does not produce words in a meaningful language any differently from how it would produce words in a meaningless or impossible language. In this sense, it has no concept of the meaning of language, a fundamentally human trait.

Even if they are not like us, are they intelligent in some other way? In the sense that they can do very complex things, sort of. Then again, a computer-automated lathe can create highly complex metal parts. By the standard of the Turing Test (that is, whether a machine’s output is indistinguishable from that of a real person), they might well be. Then again, extremely simplistic and hollow chatbot programs have managed this for decades. Yet no one thinks that machine tools or rudimentary chatbots are intelligent.

A better intuitive understanding of current generative model AI programs may be to think of them as extraordinarily capable idiot mimics. They’re like a parrot that can listen to human speech and produce not only human words, but groups of words in the right patterns. If a parrot listened to soap operas for a million years, it could probably learn to string together emotionally overwrought, dramatic interpersonal dialog. If you spent those million years giving it crackers for finding better sentences and yelling at it for bad ones, it might get better still.

Or consider another analogy. DALL-E is like a painter who lives his whole life in a gray, windowless room. You show him millions of landscape paintings with the names of the colors and subjects attached. Then you give him paint with color labels and ask him to match the colors and to make patterns statistically mimicking the subject labels. He makes millions of random paintings, comparing each one to a real landscape, and then alters his technique until they start to look realistic. However, he could not tell you one thing about what a real landscape is.

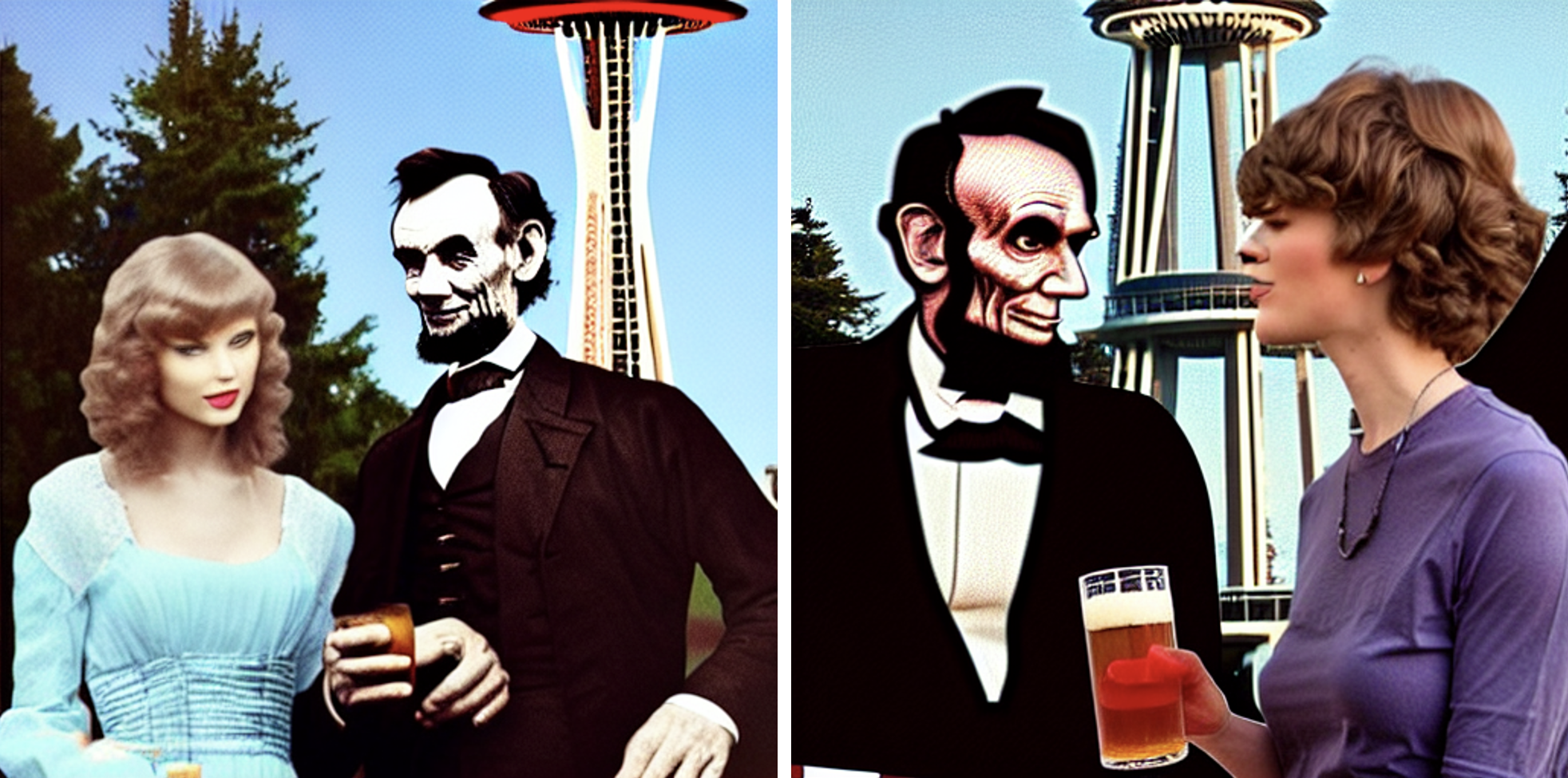

Another way to gain insight into diffusion models is to look at the images produced by a simpler one. DALL-E 2 is the most sophisticated of its kind. Version one of DALL-E often produced images that were nearly correct, but clearly not quite, such as dragon-giraffes whose wings did not properly attach to their bodies. A less powerful open-source competitor is known for producing unsettling images that are dream-like, bizarre, and not quite realistic. The flaws inherent in a diffusion model’s meaningless statistical mashups are more exposed there than in the far more polished DALL-E 2.

The future of generative AI

Whether you find it wondrous or horrifying, it appears that we have just entered an age in which computers can generate convincing fake images and sentences. It’s bizarre that a picture with meaning to a person can be generated from mathematical operations on nearly meaningless statistical noise. While the machinations are lifeless, the result looks like something more. We’ll see whether DALL-E and other generative models evolve into something with a deeper sort of intelligence, or if they can only be the world’s greatest idiot mimics.

This article was reprinted with permission of Big Think, where it was originally published.