In the world of AI, two remarkable technologies have made major strides over the last 18 months. The first is Large Language Models (LLMs), which enable AI systems to generate convincing essays, hold realistic conversations, and even write working computer code, all with little or no human supervision. The second is Generative Art, which enables automated software to create unique images and artwork from simple text prompts. Both are rooted in similar AI technology and have wowed the world with extremely impressive results.

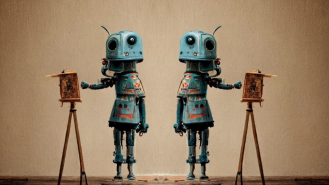

The headline image at the top of this article is one that I created using an AI-based generative artwork system. I gave the system some simple text prompts, describing the artwork I had in mind: a robot painting a picture. Within minutes, the system that I used (called Midjourney) produced a variety of computer-generated images, each an original and compelling creation.

Personally, I find the four “robot images” above to be artistic, evocative, and aesthetically pleasing. Still, these options did not quite capture the sentiment I was looking for, so I tried slightly different prompts. In the end, it took less than ten minutes to converge on the artwork used at the top of this piece. The process was much faster than contracting a human artist and remarkably flexible, allowing me to explore many directions in just a few minutes. And while this technology is currently limited to text and static images, in the near future similar systems will generate video and music with equally impressive results.

Is AI more creative than human artists?

It is important to remember that generative AI systems are not creative. In fact, they’re not even intelligent. I entered a text prompt asking for artwork depicting a robot holding a paintbrush, but the software has no understanding of what a “robot” or a “paintbrush” is. It created the artwork using a statistical process that correlates imagery with the words and phrases in the prompt.

The results are impressive because generative AI systems are trained on millions of existing works captured from the internet: images, essays, articles, drawings, paintings, photographs. Developers sometimes filter offensive content out of the training data so the systems do not reproduce it, but in general the datasets are fairly comprehensive, enabling the software to create artwork in a wide range of styles.

If a spaceship full of entrepreneurial aliens showed up on Earth and asked humanity to contribute our collective works to a database so they could generate derivative artifacts for profit, we would likely ask for compensation.

Louis Rosenberg

I don’t mean to imply these systems are unimpressive; they are amazing technologies and profoundly useful. But they are just not “creative” in the way we humans think of creativity. This may seem hard to believe, as the robot images above are clearly original pieces, imbued with character and emotion.

From that perspective, it is hard to deny that the software produced authentic artwork, and yet the AI did not feel anything while creating it, nor did it draw upon any inherent artistic sensibilities. The same is true of generative systems that produce text. The output may read smoothly, use effective and colorful language, and have genuine emotional impact, but the AI itself has no understanding of the content it produced or the emotions it was aiming to evoke.

We all created the artwork

No human can be credited with crafting the work. A person kicked off the process by providing the prompts, which makes them a collaborator of sorts, but not the artist. After all, the software, not the person, generated each piece with its own unique style and composition. So who is responsible for crafting the work?

My view is that we all created that artwork — humanity itself.

Yes, the collective we call humanity is the artist. And I don’t just mean people who are alive today, but every person who contributed to the billions of creative artifacts that generative AI systems are trained upon. And it’s not just the countless human artists who had their original works vacuumed up and digested by these AI systems, but also members of the public who shared the artwork, or described it in social media posts, or even just upvoted it so it became more prominent in the global distributed database we call the internet.

Yes, I’m saying that humanity should be the artist of record.

To support this, imagine an identical AI technology on another planet, developed by another intelligent species and trained on a database of their creative artifacts. The output might be visually pleasing to them, evocative and impactful. To us, it would probably be incomprehensible. I doubt we would even recognize it as artwork.

In other words, without being trained on a database of humanity’s creative artifacts, an identical AI system would not generate anything that we recognize as emotional artwork. It certainly would not create the robot pictures in my example above. Hence, my assertion that humanity should be considered the artist of record for large-scale generative art.

If humanity is the artist, who should be compensated?

Had an artist or a team of artists created the robot pictures above, they would have been compensated. Big-budget films can be staffed with hundreds of artists across many disciplines, all contributing to a single piece of artwork, and all of them are compensated. But what about generative artwork created by AI systems trained on millions upon millions of creative human artifacts?

If we accept that the true artist is humanity itself, who should be compensated? Clearly, the software companies that provide the generative AI tools, along with the extensive computing power required to run the models, deserve to receive substantial compensation. I have no regrets about paying the subscription fee that was required to generate the artwork examples above. It was reasonable and justified. But vast numbers of humans also participated in the creation of that artwork, their contributions embedded in the massive set of original content that the AI system was trained on.

It’s reasonable to consider a “humanity tax” on generative systems trained on massive datasets of human artifacts. It could be a modest fee on transactions, paid into a central “humanity fund” or distributed to decentralized accounts using blockchain. I know this sounds like a strange idea, but think of it this way: If a spaceship full of entrepreneurial aliens showed up on Earth and asked humanity to contribute our collective works to a database so they could generate derivative artifacts for profit, we would likely ask for compensation.

Here on Earth, this is already happening. Without being asked for consent, we humans have contributed a massive set of collective works to some of the largest corporations this world has ever seen — corporations that can now build generative AI systems and use them to sell derivative content for a profit.

This suggests that a “humanity tax” is not a crazy idea but a reasonable first step in a world that is likely to use more and more generative AI tools in the coming years. And these tools won’t just be used for making quick images at the top of articles like this one. They will soon be used for everything from crafting dialog in conversational advertisements to generating videos, music, fashion, furniture, and of course, more artwork.

This article was reprinted with permission of Big Think, where it was originally published.