How do you train a robot to do your dishes? It’s a simple chore, but training a robot to understand all the different small tasks within it is extremely complex.

A new method developed by researchers at Carnegie Mellon University demonstrates a pretty straightforward strategy: Have the robot watch a bunch of videos of you doing it.

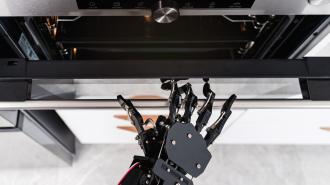

An innovative robot-learning technique: In a recent paper, researchers described how they trained two robots to successfully perform 12 household tasks, including removing a pot from a stove, opening drawers and cabinets, and interacting with objects like produce, knives, and garbage cans.

It may not be surprising that modern robots can do these tasks. But what distinguishes the new approach is how the robots learned to perform them: by watching videos of humans interacting with objects in the kitchen.

Conventional strategies involve humans manually programming the robots to execute specific behaviors, or having robots learn new tasks in a simulated environment before trying them out in the real world. Both strategies are time-consuming and can be unreliable.

A newer technique, used in earlier research by the Carnegie Mellon team, has robots learn tasks by watching humans perform them in a controlled setting. The new method doesn’t require people to go out of their way to model the behavior in front of the robot. Instead, the robots “watch” egocentric videos (read: camera on forehead) of humans performing specific tasks and then use that information to start practicing the task themselves. With this approach, the robots learned to perform a task successfully in about 25 minutes.

To train the robots, the team developed a model based on the concept of “affordances” — a psychological term that refers to what the environment offers us in terms of possible actions. For example, the handle of a skillet offers us the ability to easily pick it up.

By watching videos of people doing chores, the robots learned to recognize patterns in how humans are likely to interact with certain objects. They then used that information to predict the series of movements they needed to execute to perform a given task.
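To make the intuition described above concrete, here is a deliberately simplified sketch in Python. It is not the team’s Vision-Robotics Bridge model. It only illustrates the idea of learning affordances from observation: tally where people touched each kind of object across many observed clips, then propose the most common contact point and motion as a starting guess. The observation format, object names, and the `learn_affordances` and `suggest_affordance` functions are all hypothetical.

```python
from collections import Counter, defaultdict

# Hypothetical observations pulled from human videos:
# (object type, part of the object the hand touched, motion after contact)
observations = [
    ("drawer", "handle", "pull"),
    ("drawer", "handle", "pull"),
    ("drawer", "front panel", "push"),
    ("skillet", "handle", "lift"),
    ("skillet", "handle", "lift"),
    ("skillet", "rim", "lift"),
    ("cabinet", "knob", "pull"),
]

def learn_affordances(obs):
    """Count how often each (contact part, motion) pair was seen per object type."""
    table = defaultdict(Counter)
    for obj, part, motion in obs:
        table[obj][(part, motion)] += 1
    return table

def suggest_affordance(table, obj):
    """Return the most frequently observed (contact part, motion) for an object,
    or None if that object never appeared in the videos."""
    if obj not in table:
        return None
    (part, motion), _count = table[obj].most_common(1)[0]
    return part, motion

affordances = learn_affordances(observations)
print(suggest_affordance(affordances, "drawer"))   # ('handle', 'pull')
print(suggest_affordance(affordances, "skillet"))  # ('handle', 'lift')
```

In the researchers’ system, this kind of guess comes from a model trained on video rather than from a hand-written table, and the robots then improve on it through practice. The lookup table simply stands in for that learned prediction.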

The team reported that their method, which was tested on two robots for more than 200 hours in “play kitchen” environments, outperformed prior approaches on real-world robotics tasks.

Notably, the robots didn’t just watch people do things over and over in the same kitchen; they watched people in many different kitchens, each of which might feature different-looking pots and drawers. In other words, the robots learned to generalize the new skill.

It’s an ability made possible by advances in computer vision.

Computer vision: When it comes to teaching AI to perform tasks, it’s far easier to train a language-processing system like ChatGPT to write an essay than it is to train a robot to do the dishes. One reason: It’s hard to get a robot to move properly in physical space, and that’s especially true for novel spaces. After all, not all kitchens are the same.

But there’s also the problem of computer vision — the field of artificial intelligence that trains computers to “see.” For decades, it has proven remarkably difficult to train computers to derive meaningful information from images.

First, consider what goes into the process for humans. When you enter a kitchen, it takes a split second for you to visually recognize the sink, stove, refrigerator, cabinets, drawers, and other tools and appliances. You not only identify these objects correctly but also understand how to use them. In other words, you recognize their affordances.

This skill doesn’t come naturally to a computer, which at first just “sees” thousands of individual pixels that together form the image of a kitchen. AI researchers have had to train computers to recognize patterns in pixels by showing them a ton of labeled images, so they can eventually learn to differentiate, for example, a stove from a sink. (You know all those “prove you’re not a robot, pick out the images with a school bus” CAPTCHAs? That’s you training algorithms like this.)
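A toy version of “learning from labeled images” fits in a few lines of Python. The sketch below uses a nearest-centroid classifier, a far simpler technique than the deep neural networks behind modern computer vision, on made-up 2×2 grayscale “images.” The pixel values, labels, and function names are invented purely for illustration. The classifier averages the labeled examples of each class, then assigns a new image to whichever class average it sits closest to.

```python
import math

# Invented 2x2 grayscale "images" (pixel brightness 0-255), flattened to lists.
# For this toy, pretend stoves look dark and sinks look bright.
labeled_images = [
    ([30, 40, 35, 25], "stove"),
    ([20, 50, 45, 30], "stove"),
    ([200, 210, 190, 220], "sink"),
    ([180, 230, 205, 195], "sink"),
]

def train_centroids(examples):
    """Average the pixel values of all training images that share a label."""
    sums, counts = {}, {}
    for pixels, label in examples:
        if label not in sums:
            sums[label] = [0.0] * len(pixels)
            counts[label] = 0
        sums[label] = [s + p for s, p in zip(sums[label], pixels)]
        counts[label] += 1
    return {label: [s / counts[label] for s in sums[label]] for label in sums}

def classify(centroids, pixels):
    """Return the label whose average image is closest (Euclidean distance)."""
    return min(centroids, key=lambda label: math.dist(centroids[label], pixels))

centroids = train_centroids(labeled_images)
print(classify(centroids, [40, 35, 30, 45]))      # stove
print(classify(centroids, [210, 200, 215, 190]))  # sink
```

Real systems learn far richer features from many more labeled images, but the basic idea of learning from labeled examples is the same: the labels are what let the model tell a stove from a sink.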

Still, correctly recognizing objects is only part of the battle.

“[E]ven with perfect perception, knowing what to do is difficult,” the researchers wrote. “Given an image, current computer vision approaches can label most of the objects, and even tell us approximately where they are but this is not sufficient for the robot to perform the task. It also needs to know where and how to manipulate the object, and figuring this out from scratch in every new environment is virtually impossible for all but the simplest of tasks.”

The team’s affordance-based method, which they call the “Vision-Robotics Bridge,” helps streamline the way robots learn simple tasks, largely by minimizing the amount of training data that researchers need to provide the robots. In the recent study, the training data came from a database of egocentric videos.

But lead study author Shikhar Bahl suggested in a press release that robots may soon be able to learn from a wider array of videos across the internet, though that might require computer-vision models that don’t rely on an egocentric perspective.

“We are using these datasets in a new and different way,” Bahl said. “This work could enable robots to learn from the vast amount of internet and YouTube videos available.”